TL;DR

We developed an alpha version of a browser-based 3D shooting game. We are all very excited about it because the game runs purely inside a browser and its scene is georeferenced to the real world. We were able to build it fast because we leveraged some awesome open source projects, including CesiumJS, 3D Tiles, GraphQL, Angular and NodeJS.

The alpha version of the game is online. Come shoot us, here.

The game is open source. Our GitHub repo begs for stars, here.

The Big Bang

“Is it possible to develop an in-browser, georeferenced, multiplayer, 3D first-person shooting video game?”

I remember asking our brainiest engineer that question five years ago.

And his answer? You have never seen a brainy engineer mumble, fumble and bumble in a more heroic way:

“Well, it is software after all”, he said, “everything is possible with software”.

“But then again”, he kept outsmarting me, “the question is not if it’s possible. Is it feasible…?”

“You’ll need to develop a JavaScript-based 3D rendering engine, attach it to the real world with complicated geodesic projection calculations, develop a software layer to control the characters’ dynamics, solve the problem of real-time, low-latency state and data updates for multiple players over HTTP, solve the synchronization problem, find a way to stream enormous amounts of 3D scene data to the clients, integrate and debug all those complicated pieces of software together, and voilà… there you have it, you’ve got yourself an in-browser georeferenced multiplayer shooting game…”

“Thanks a lot, Sheldon Cooper”, I grumbled out loud. But like any manager in the history of software development, I couldn’t restrain myself and asked: “What would it take to build it? Give me your best estimate… How much effort will it take to develop such a game…?”

Our Sheldon didn’t hesitate: “One and a half gazillion software development man-years. One point three gazillion if you manage to hire the most brilliant engineers in the industry…”

Bang. Big Bang. No GeoStrike for me…

How to climb a giant wall? Start by standing on the shoulders of giants

Back then, five years ago, I understood that a giant wall stood between us and GeoStrike, which I thought was an outstanding idea. Such a project requires enormous resources, resources available only to a Google-scale company.

I was wrong. I dramatically underestimated the power of the open source wave. Five years later, we built a proof of concept (POC) of exactly that: an in-browser georeferenced 3D multiplayer shooting game. And it took us less than three months to build it.

The open source wave and culture broke down that wall for us. In this post I will describe GeoStrike Alpha – the video game proof of concept we developed by exploiting the power of some awesome open source libraries, standards and frameworks – including CesiumJS, Angular, 3D Tiles and Apollo GraphQL.

GeoStrike? What? Why?

Let’s start from the beginning. When we say an in-browser, georeferenced, 3D shooting game – what do we mean, and why are we so excited about it? Let’s look at our fundamental requirements:

- In browser. No installations or plugins allowed. In browser equals scale: our game can reach anywhere the internet reaches. Furthermore, we require a pure web, ‘native’ implementation. Down go all the non-JavaScript, compilation-based frameworks. Down goes Unity. That’s a harsh requirement; there aren’t many good browser-based shooting video games.

- Georeferenced. Traditional 3D game engines usually model the game world as a simple, virtual, uniform 3D space and use a simple X-Y-Z Cartesian coordinate system. Their computer graphics modules transform those virtual 3D game-scene objects to the 2D screen pixel space precisely and efficiently. We required exactly that, but added a flavoursome extra requirement. Objects in our 3D game world should also be georeferenced, meaning – they should be related to a real place in our real world.

This opens the way for some exciting new use cases for a shooting game. Think about it – if objects in our game space can be related to places in the real world, and if places in the real world can be related to objects in the game space – then we can use all of the planet’s geo-tagged (big) data as an endless supply for our video game. We can go shooting in the streets of New York. We can integrate real-world, real-time objects into our game scene. Think of airplanes, quads, cabs, satellites, cell phones, roads, 3D city models – all can be streamed in real time to our clients. We can augment the real world with our game world and vice versa. This augmentation has endless potential waiting to be unleashed. Think of ‘Pokemon Go’ on steroids. Think of defense and security agencies being able to simulate a real operational scene before any boots hit the ground.

But georeferencing doesn’t come easy. The fact that we live on the surface of a weird spherical, oval, egg-shaped planet brings to the table all sorts of bizarre concepts, such as ‘Geoid’, ‘Ellipsoid’, ‘Datum’, ‘Photogrammetry’ and ‘Terrain’, each with its respective formulas. No wonder traditional 3D game engines don’t georeference…

- Shooting game. A first-person shooting game. We’ll need the game state to be distributed to and from our clients in real time with low latency, so that the gaming experience will be as fluent as possible. We’ll need a way to measure distances and calculate collisions between objects, so that players won’t run through walls, and so that one player’s boom will immediately become the other player’s bang. We’ll need a way to model the dynamics and kinematics of our game objects, so our game engine will understand that birds and quads (and superheroes?) can fly, people can walk and run but can’t fly, cars and trucks can both drive but under different conditions and at different max speeds, and so on. All in browser. All georeferenced.
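To give a taste of those formulas: placing an object on the WGS84 ellipsoid means converting geodetic latitude, longitude and height into Earth-centered, Earth-fixed (ECEF) Cartesian coordinates. CesiumJS does this for us (for example via `Cartesian3.fromDegrees`); the standalone sketch below shows the standard conversion purely as an illustration, and is not taken from the GeoStrike code base. The function and constant names are our own.

```typescript
// WGS84 ellipsoid constants: semi-major axis (meters) and flattening.
const WGS84_A = 6378137.0;
const WGS84_F = 1 / 298.257223563;
// First eccentricity squared, e^2 = f * (2 - f).
const WGS84_E2 = WGS84_F * (2 - WGS84_F);

interface Cartesian {
  x: number;
  y: number;
  z: number;
}

/** Converts geodetic latitude/longitude (degrees) and ellipsoidal height (meters) to ECEF meters. */
function geodeticToEcef(latDeg: number, lonDeg: number, heightM: number): Cartesian {
  const lat = (latDeg * Math.PI) / 180;
  const lon = (lonDeg * Math.PI) / 180;
  const sinLat = Math.sin(lat);
  const cosLat = Math.cos(lat);
  // Prime vertical radius of curvature at this latitude.
  const n = WGS84_A / Math.sqrt(1 - WGS84_E2 * sinLat * sinLat);
  return {
    x: (n + heightM) * cosLat * Math.cos(lon),
    y: (n + heightM) * cosLat * Math.sin(lon),
    z: (n * (1 - WGS84_E2) + heightM) * sinLat,
  };
}

/** Straight-line (chord) distance in meters between two ECEF points. */
function ecefDistance(a: Cartesian, b: Cartesian): number {
  return Math.hypot(a.x - b.x, a.y - b.y, a.z - b.z);
}
```

Because the result is expressed in meters in one global frame, distances between georeferenced objects reduce to ordinary Euclidean distances between ECEF points.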

Geo Who?

So we at Webiks turned to our partners at ‘The Guild’ and decided to prototype together. Webiks is a software development company that specializes in high-end data analysis and real-time situational awareness web applications. The Guild is an organization of freelance software engineers. They are talented, they love coffee, they specialize in GraphQL and CesiumJS, and they code at the speed of light.

Here is what we got so far. GeoStrike alpha.

Geo How?

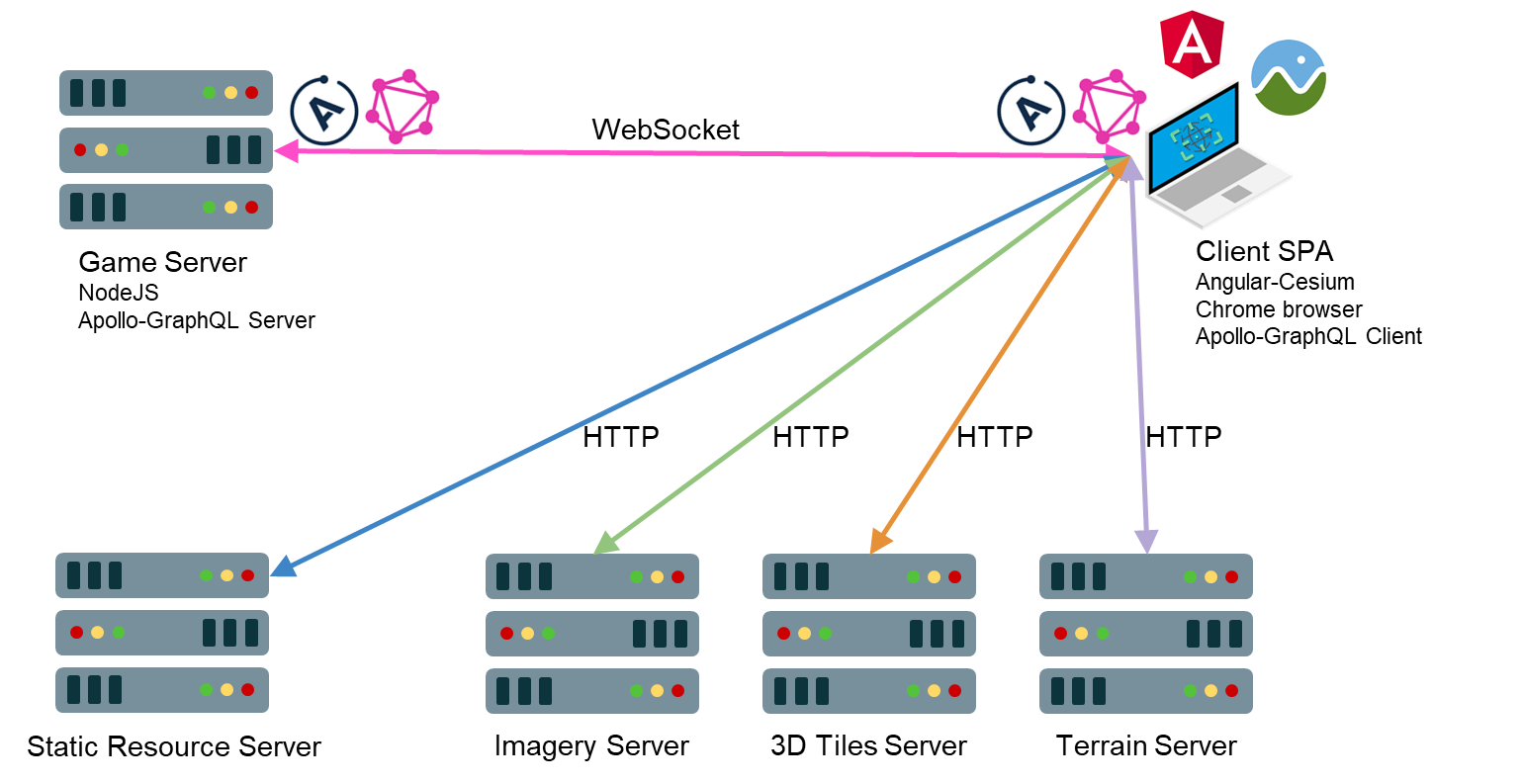

The general architecture is a web oriented microservices architecture.

The client app is a single page application (SPA) built with Angular and CesiumJS. Angular manages the UI logic. CesiumJS is an AGI-sponsored open source JavaScript library for 3D virtual globes, built on WebGL and enthusiastically adopted by the open source community. The game itself happens inside a CesiumJS canvas: Cesium renders the world for us, and we just needed to place and update the relevant characters and objects in the scene and manipulate Cesium’s camera to provide the first-person view. To bind the two we used angular-cesium, an open source JavaScript library developed by the folks from The Guild and optimized for real-time, high-performance applications.

The app’s static files and some additional static resources are served by the static resource server over HTTP.

The 3D scene of the game is a composition of three layers, each streamed dynamically to the client from a separate HTTP endpoint:

- The terrain server feeds the client with data describing the 3D shape of the earth in the game scene. We used AGI’s publicly available STK World Terrain endpoint.

- The imagery server serves satellite imagery in a raster format. Those images are draped over the terrain to provide a realistic scene. We used Microsoft Bing Maps.

- The 3D Tiles server streams the 3D models of the buildings. We used Cesium’s publicly available New York City 3D tileset.

CesiumJS takes care of the terrain – imagery – 3D Tiles orchestration for us. We didn’t have to do a lot here.

The NodeJS game server is in charge of all the server-side game logic. It creates and manages the game ‘rooms’, simulates the movement of the non-player characters (grandpas and humvees) and serves as the single source of truth for the game state.

The clients and the game server interact in real time. Apollo GraphQL is used on both sides. GraphQL helps us explicitly model the game ontology with a GraphQL schema: players, non-player characters and bullets are all entities in our GraphQL game schema. Using GraphQL subscriptions, clients subscribe to real-time updates of the game state from the game server and receive the positions and states of all other players and characters. Apollo GraphQL manages the WebSocket for us, so we didn’t have to deal with the connection management hassle. Using GraphQL mutations, clients update the state of the characters they control.
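A minimal sketch of that mutation and subscription flow follows. The schema fragment, type names and topic format are hypothetical, not copied from the GeoStrike repo, and the small in-memory pub/sub class stands in for the pub/sub engine behind Apollo subscriptions, so the example runs on its own without Apollo or a WebSocket.

```typescript
// Hypothetical fragment of a game schema (GraphQL SDL); names are illustrative only.
const typeDefs = `
  type GeoPosition { latitude: Float! longitude: Float! height: Float! }
  input GeoPositionInput { latitude: Float! longitude: Float! height: Float! }
  type Player { id: ID! name: String! position: GeoPosition! }
  type Query { players(gameId: ID!): [Player!]! }
  type Mutation { updatePosition(gameId: ID!, playerId: ID!, position: GeoPositionInput!): Player }
  type Subscription { gameState(gameId: ID!): [Player!]! }
`;

// TypeScript mirrors of the schema types above.
interface GeoPosition { latitude: number; longitude: number; height: number }
interface Player { id: string; name: string; position: GeoPosition }
type Listener<T> = (payload: T) => void;

// Tiny in-memory stand-in for the pub/sub engine behind GraphQL subscriptions.
class InMemoryPubSub<T> {
  private topics = new Map<string, Set<Listener<T>>>();

  // Registers a listener for a topic and returns a function that unregisters it.
  subscribe(topic: string, listener: Listener<T>): () => void {
    const listeners = this.topics.get(topic) ?? new Set<Listener<T>>();
    this.topics.set(topic, listeners);
    listeners.add(listener);
    return () => {
      listeners.delete(listener);
    };
  }

  // Delivers the payload synchronously to every current listener of the topic.
  publish(topic: string, payload: T): void {
    this.topics.get(topic)?.forEach((listener) => listener(payload));
  }
}

// Authoritative state of a single hypothetical game room, keyed by player id.
const players = new Map<string, Player>();
const pubsub = new InMemoryPubSub<Player[]>();
const gameStateTopic = (gameId: string): string => `GAME_STATE:${gameId}`;

// Body of the updatePosition mutation: a client reports the new state of the character it controls.
function updatePosition(playerId: string, position: GeoPosition): Player | null {
  const player = players.get(playerId);
  if (!player) return null;
  player.position = position;
  return player;
}

// Pushes a snapshot of the room to every subscriber of its gameState topic.
function publishGameState(gameId: string): void {
  pubsub.publish(gameStateTopic(gameId), Array.from(players.values()));
}
```

In a real Apollo server, the subscription resolver returns an async iterator over the topic rather than registering a callback, and the WebSocket transport carries each snapshot to the browser.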

Geo Ouch. Problems and Solutions

While developing GeoStrike alpha we dealt with some interesting challenges:

1. We wanted the gaming experience to be as fluent as possible, so we had to carefully balance the trade-offs of client-to-server and server-to-client communication. On the one hand, we wanted our clients and servers to shoot an update immediately when the state changes (‘shoot’ an update, haha). On the other hand, we didn’t want to overwhelm our sockets and our parsers with too many, too rapid updates. In the single-threaded JavaScript world, this can quickly lead to crashes and rendering lags. Think of it: we have multiple players and hundreds of non-player characters, all moving freely in 3D space (walking, turning, shooting), and we want every movement to be pushed immediately to all other clients so they can render its consequences in real time. Challenge accepted.

We ended up developing the following mechanism:

The game server and the clients each execute a game update loop. Events between update loop intervals are buffered and published only upon tick. The game server publishes the actual game state to all clients once every server interval. Each client publishes its authoritative changes, i.e. the changes in the state of the character it controls, via GraphQL mutations, once every client interval.

In our current implementation we set both the client and the server update loop intervals to 200ms. We found that this setup strikes a good balance: it keeps our sockets from being jammed and their message handling from being delayed, while still letting state changes flow fast enough between players.

However, we wanted the rendering and the players’ movement to be smooth. If we waited 200ms before every rendering update, characters would start “leaping”. We don’t want our Spider-Man to leap like a frog, do we? Cesium to the rescue. Cesium’s API has a built-in linear interpolation mechanism. We let Cesium interpolate the positions of our characters between every two update loop ticks. Our superheroes can once again float smoothly through the world, as they deserve.
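The buffering side of this loop can be sketched in a few lines. This is a hypothetical illustration, not the GeoStrike implementation: it assumes that only the latest change per entity matters between ticks (true for positions and orientation), so repeated updates for the same character are coalesced. Discrete events such as shots would need to be queued rather than overwritten.

```typescript
// Collects state changes between ticks and flushes them as one batch per interval.
class TickBuffer<T> {
  // Latest pending change per entity id; Map keeps first-insertion order.
  private pending = new Map<string, T>();
  private readonly flush: (batch: T[]) => void;

  constructor(flush: (batch: T[]) => void) {
    this.flush = flush;
  }

  // Records a change; a later change for the same entity overwrites the earlier one.
  push(entityId: string, change: T): void {
    this.pending.set(entityId, change);
  }

  // Called once per tick: publishes whatever accumulated since the previous tick, if anything.
  tick(): void {
    if (this.pending.size === 0) return;
    const batch = Array.from(this.pending.values());
    this.pending.clear();
    this.flush(batch);
  }

  // Starts a fixed-interval update loop and returns a function that stops it.
  start(intervalMs: number): () => void {
    const handle = setInterval(() => this.tick(), intervalMs);
    return () => clearInterval(handle);
  }
}

// The interval used in our current setup, for both client and server loops.
const UPDATE_INTERVAL_MS = 200;
```

On the client, `flush` would send one GraphQL mutation per tick; on the server, it would publish the game state snapshot. With a 200ms interval, ten position changes of the same character within one interval collapse into a single published update.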
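The math behind that interpolation is simple enough to show directly. In Cesium it is typically expressed by adding timestamped samples to something like a `SampledPositionProperty`; the plain function below (hypothetical names, positions in any Cartesian frame such as ECEF meters) only illustrates the idea: given the two most recent server samples, it blends them according to where the render time falls between their timestamps.

```typescript
interface Sample {
  timeMs: number; // timestamp of the server update
  x: number;
  y: number;
  z: number;
}

/** Linearly interpolates a position at renderTimeMs between two consecutive samples. */
function interpolate(prev: Sample, next: Sample, renderTimeMs: number): [number, number, number] {
  const span = next.timeMs - prev.timeMs;
  // Fraction of the way from prev to next, clamped to [0, 1] so we never extrapolate.
  const t = span <= 0 ? 1 : Math.min(1, Math.max(0, (renderTimeMs - prev.timeMs) / span));
  return [
    prev.x + (next.x - prev.x) * t,
    prev.y + (next.y - prev.y) * t,
    prev.z + (next.z - prev.z) * t,
  ];
}
```

Clamping `t` means a character stops at its last known position if the next update is late, instead of overshooting. Rendering slightly behind the newest sample (for example by one tick) is a common way to always have a "next" sample to interpolate toward.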

2. We are not only shooting wabbits in Elmer Fudd’s woods. GeoStrike players should be able to play the game in every possible spot on the globe, including urban areas. Urban areas create two more complications. First, our characters should not be able to walk through walls and buildings. Second, they should be able to actively step inside a building. Wolverine should not only walk the streets of New York; he should also be able to step into a building, look out the window, and shoot at that loitering Bruce Wayne.

Cesium 3D Tiles provides us with the 3D models of the New York buildings. When a player approaches a building, we want our game engine to recognize the approach and calculate the distance. The natural implementation would be to measure the distance between the player and the bounding boxes of the nearest buildings. If that distance becomes small enough, the game engine should prevent the player from walking through, but should offer the player the option to step in. However, we discovered that the bounding boxes of the buildings in the New York dataset aren’t precise enough. While that might improve in the future, we wanted a proof of concept now, so we improvised.

Cesium has a built-in ‘pick-ray’ method, which returns the objects intersected by an imaginary ray cast from the camera toward the virtual globe. We used this feature to calculate the distance between our player and the wall of the nearest building in his line of sight. While this hack works fine, it has one main drawback: it only works along the character’s line of sight. So for now our players can only move straight forward, because if we let them move sideways we wouldn’t be able to prevent them from walking through walls. This should definitely be improved in the future.
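Cesium performs the actual ray intersection against the 3D Tiles geometry. To illustrate the geometry behind a forward-only wall check, here is a sketch that swaps in a plain ray versus axis-aligned box test (the standard slab method) on hypothetical obstacle boxes in a local metric frame, and allows a step only if enough clearance remains ahead. The function names, the box representation and the clearance parameter are assumptions made for illustration.

```typescript
type Vec3 = [number, number, number];

interface Box {
  min: Vec3;
  max: Vec3;
}

/**
 * Slab-method ray vs. axis-aligned box intersection.
 * Returns the distance along the (unit) direction to the first hit, or null on a miss.
 */
function rayBoxDistance(origin: Vec3, dir: Vec3, box: Box): number | null {
  let tMin = -Infinity;
  let tMax = Infinity;
  for (let i = 0; i < 3; i++) {
    if (Math.abs(dir[i]) < 1e-12) {
      // Ray parallel to this slab: it misses unless the origin lies between the two planes.
      if (origin[i] < box.min[i] || origin[i] > box.max[i]) return null;
      continue;
    }
    let t1 = (box.min[i] - origin[i]) / dir[i];
    let t2 = (box.max[i] - origin[i]) / dir[i];
    if (t1 > t2) {
      [t1, t2] = [t2, t1];
    }
    tMin = Math.max(tMin, t1);
    tMax = Math.min(tMax, t2);
    if (tMin > tMax) return null;
  }
  if (tMax < 0) return null; // the box lies entirely behind the ray
  return Math.max(tMin, 0); // 0 when the origin is already inside the box
}

/** Allows a forward step only if at least `clearance` meters remain to the nearest obstacle ahead. */
function canStepForward(origin: Vec3, dir: Vec3, obstacles: Box[], step: number, clearance: number): boolean {
  for (const box of obstacles) {
    const d = rayBoxDistance(origin, dir, box);
    if (d !== null && d < step + clearance) return false;
  }
  return true;
}
```

Because the test only looks in one direction, it shares the limitation described above: checking sideways movement would require casting additional rays in those directions.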

3. After implementing the first-person view of the game, we still felt something was lacking. We wanted to add a ‘top view’, or map view, which displays all our players on a map. We thought this feature could have many future use cases. Players on the same team can use it to coordinate their tactics. We could also add a new character type, a ‘commander’, and simulate the commander sitting in his war room, monitoring the activity of his subordinates on the ground and shouting commands…

The implementation of the map view feature was slick as a whistle. Our entire game scene is georeferenced, after all, so all we had to do was set Cesium’s camera parameters to a top view and swap the characters’ realistic 3D models for pictographic elements (we used Cesium entities). Cesium and angular-cesium gave us that feature for free.

4. Shooting sound. When a player fires his rifle, we want him to hear the bang. That’s easy and straightforward; we used HTML5 audio. However, we also wanted the other active players to hear that bang, and the closer it is, the louder it should sound. As Cesium doesn’t support 3D sound, we implemented a simple shooting sound heuristic. The shot was defined as a GraphQL entity in our schema, and every time a player shot, an update was sent from the client to the server via a GraphQL mutation. That mutation triggered the shots subscription for all other players, so the gunshot event was published and pushed to them. The shot’s volume was then set linearly according to the distance between the listening player and the shooting location.
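The volume heuristic can be sketched as follows. This assumes shot and listener positions arrive as latitude/longitude in degrees, uses the haversine great-circle distance on a spherical Earth (an approximation that ignores height), and applies a linear falloff up to a hypothetical maximum audible distance; none of these names or constants come from the GeoStrike code.

```typescript
// Mean Earth radius in meters; a sphere is accurate enough for a volume heuristic.
const EARTH_RADIUS_M = 6371008.8;

/** Great-circle distance in meters between two lat/lon points (degrees), via the haversine formula. */
function haversineMeters(lat1: number, lon1: number, lat2: number, lon2: number): number {
  const toRad = (deg: number): number => (deg * Math.PI) / 180;
  const dLat = toRad(lat2 - lat1);
  const dLon = toRad(lon2 - lon1);
  const h =
    Math.sin(dLat / 2) ** 2 +
    Math.cos(toRad(lat1)) * Math.cos(toRad(lat2)) * Math.sin(dLon / 2) ** 2;
  // Clamp guards against tiny floating-point overshoot above 1.
  return 2 * EARTH_RADIUS_M * Math.asin(Math.min(1, Math.sqrt(h)));
}

/** Linear falloff: full volume at the shooter, silent at or beyond maxAudibleM. */
function shotVolume(distanceM: number, maxAudibleM: number): number {
  if (maxAudibleM <= 0) return 0;
  return Math.max(0, 1 - distanceM / maxAudibleM);
}
```

The result lies in the 0 to 1 range expected by an HTML audio element's `volume` property, e.g. `audio.volume = shotVolume(d, 500)` for a 500 m audible radius.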

Geo Bright. What’s Next?

We see a bright future for GeoStrike. While the alpha version of the game is more of a proof of concept than an actual video game, we see huge potential for a full-scale, in-browser, georeferenced, multiplayer 3D shooting game.

Here are some features we consider tackling next:

- Add more characters, vehicles and weapon types. Allow Batman to pick up a jetpack and lift off for a short trip above New York City. Add airplanes, UAVs and yellow cabs. Add weapon types such as bombs that can blast entire buildings.

- Allow free real-time communication between players on the same team and with their commanders. We are considering both chat and voice support.

- Improve the scene rendering itself – higher resolution raster, textured 3D buildings, better looking characters.

- Make the dynamics of the characters more realistic. Our superheroes currently all seem to ride hoverboards in the streets of New York. We are considering adding body-part-based animations.

- Go underground? Should we allow our superheroes to meet the Ninja Turtles in the New York sewers?

- Augment GeoStrike with real-time data feeds of real objects. Wouldn’t it be cool if GeoStrike simulated the actual traffic jams in the streets of New York?

- Add dynamic filtering layers to our publish-subscribe mechanism. Our current pub-sub implementation is relatively simplistic: the state of all the players and all the non-player characters is pushed to every player, regardless of their position. However, we don’t necessarily have to push all this data at the same rate and priority. Distant characters can be updated less frequently.
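One possible shape for such a filter, sketched under our own assumptions (the tier boundaries and tick divisors below are hypothetical and untuned): the server keeps its single update loop, but for each receiving player it includes a given character only on every Nth tick, where N grows with the distance between them.

```typescript
interface Tier {
  maxDistanceM: number; // characters closer than this fall into the tier
  everyNthTick: number; // include them on every Nth server tick
}

// Hypothetical tiers, ordered from nearest to farthest; the last one catches everything else.
const TIERS: Tier[] = [
  { maxDistanceM: 200, everyNthTick: 1 },
  { maxDistanceM: 1000, everyNthTick: 3 },
  { maxDistanceM: Infinity, everyNthTick: 10 },
];

/** Decides whether a character `distanceM` away from the receiving player is sent on server tick `tick`. */
function shouldSend(distanceM: number, tick: number, tiers: Tier[] = TIERS): boolean {
  const tier = tiers.find((t) => distanceM < t.maxDistanceM) ?? tiers[tiers.length - 1];
  return tick % tier.everyNthTick === 0;
}
```

With the 200ms server tick from our current setup, these example tiers would update nearby characters five times per second, mid-range ones every 0.6 seconds and distant ones every two seconds.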

Geo Out

We plan to keep developing and improving GeoStrike.

Want to contribute? Come say hi at our GitHub repo.

Have a question, a remark, a wonderful suggestion? This blog post’s comments section is open and waiting for you.

Want to do business with us? Contact us at [email protected].